Robots are no longer limited to repeating the same movements over and over. Thanks to foundation models, robots can now learn from large amounts of data, adapt to new environments, and perform many different tasks.

In this post, we’ll explore what foundation models are and how they are used in robotics—especially for trajectory control and generalized robotics applications.

What Are Foundation Models?

A foundation model is a large machine learning model trained on huge and diverse datasets. Instead of learning just one specific task, these models learn general patterns that can be reused in many situations.

Examples include models that understand:

- Text

- Images and video

- Robot sensor data (cameras, force sensors, joint positions)

Once trained, foundation models can be adapted to new tasks with much less extra training.

Simple idea: Foundation models give robots a general understanding of the world, rather than teaching them only one fixed skill.

What Is Trajectory Control in Robotics?

Trajectory control is about deciding how a robot should move over time. This includes:

- Where the robot should go

- How fast it should move

- How smooth and safe the motion should be

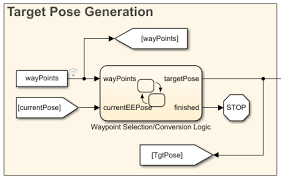

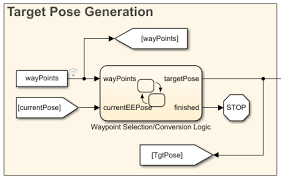

For example, a robotic arm picking up a cup must move from its starting position to the cup smoothly, without hitting anything. That planned path, together with its timing, is called a trajectory. A trajectory is typically planned between poses: reference configurations of the robot (positions and orientations, or joint angles) that respect its degrees of freedom and movement capabilities.
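For comparison, here is a minimal sketch of one classic, non-learned way to plan between two poses: cubic time scaling in joint space. It covers "where" (the goal pose), "how fast" (the duration), and "smooth" (zero velocity at both ends), but not collision avoidance. The function name, joint values, and duration are illustrative assumptions, not any particular robot's API.

```python
from typing import List, Tuple


def cubic_joint_trajectory(q_start: List[float], q_goal: List[float],
                           duration: float, steps: int) -> List[Tuple[float, List[float]]]:
    """Sample a joint-space trajectory from q_start to q_goal.

    Uses cubic time scaling s(tau) = 3*tau**2 - 2*tau**3, which goes from 0 to 1
    with zero velocity at both ends, so the arm starts and stops smoothly.
    Returns a list of (time_in_seconds, joint_positions) samples.
    """
    if len(q_start) != len(q_goal):
        raise ValueError("both poses must have the same number of joints")
    if duration <= 0 or steps < 2:
        raise ValueError("need a positive duration and at least two samples")
    samples = []
    for i in range(steps):
        tau = i / (steps - 1)          # normalized time in [0, 1]
        s = 3 * tau**2 - 2 * tau**3    # fraction of the motion completed
        q = [a + s * (b - a) for a, b in zip(q_start, q_goal)]
        samples.append((tau * duration, q))
    return samples


# Hypothetical 3-joint arm moving from a "home" pose to a pre-grasp pose above the cup.
home = [0.0, 0.0, 0.0]
pre_grasp = [0.5, -0.3, 1.2]  # joint angles in radians (made-up values)
for t, q in cubic_joint_trajectory(home, pre_grasp, duration=2.0, steps=5):
    print(f"t={t:.1f}s  q={[round(x, 3) for x in q]}")
```

Most of the motion happens in the middle of the time window; the samples near the start and end change slowly, which is what keeps the movement smooth.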

How Foundation Models Help With Trajectory Control

Traditional robots rely on carefully programmed rules and mathematical equations. Foundation models take a more flexible, data-driven approach.

They help with trajectory control by:

- Learning from demonstrations: robots can watch humans or other robots perform tasks and learn good movement patterns.

- Adapting to new situations: if an object moves or the environment changes, the robot can adjust its trajectory instead of failing.

- Handling uncertainty: real-world sensors are noisy and imperfect, but foundation models can still make reasonable movement decisions.
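To make "learning from demonstrations" concrete, here is behavior cloning in its simplest form: fit a mapping from observations to actions by least squares over recorded (observation, action) pairs. This is a toy stand-in; foundation models learn far richer mappings, from images and other sensors, with large neural networks. The demonstration numbers and the function name are made up for illustration.

```python
from typing import Callable, List, Tuple


def fit_linear_policy(demos: List[Tuple[float, float]]) -> Callable[[float], float]:
    """Behavior cloning in its simplest form: least-squares fit of action = k * obs + b.

    Each demo is an (observation, action) pair recorded while a human or another
    robot performed the task. Fitting over many noisy demos averages out the noise.
    """
    n = len(demos)
    if n < 2:
        raise ValueError("need at least two demonstrations")
    mean_obs = sum(o for o, _ in demos) / n
    mean_act = sum(a for _, a in demos) / n
    var_obs = sum((o - mean_obs) ** 2 for o, _ in demos)
    if var_obs == 0:
        raise ValueError("observations must vary to fit a slope")
    cov = sum((o - mean_obs) * (a - mean_act) for o, a in demos)
    k = cov / var_obs
    b = mean_act - k * mean_obs
    return lambda obs: k * obs + b


# Hypothetical demos: (distance to the cup in m, forward speed the demonstrator used in m/s).
demos = [(0.40, 0.21), (0.30, 0.14), (0.20, 0.11), (0.10, 0.04), (0.05, 0.03)]
policy = fit_linear_policy(demos)
print(f"distance 0.25 m -> speed {policy(0.25):.3f} m/s")
```

The learned policy also answers for distances that never appeared in the demonstrations, which is the small-scale version of adapting instead of failing.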

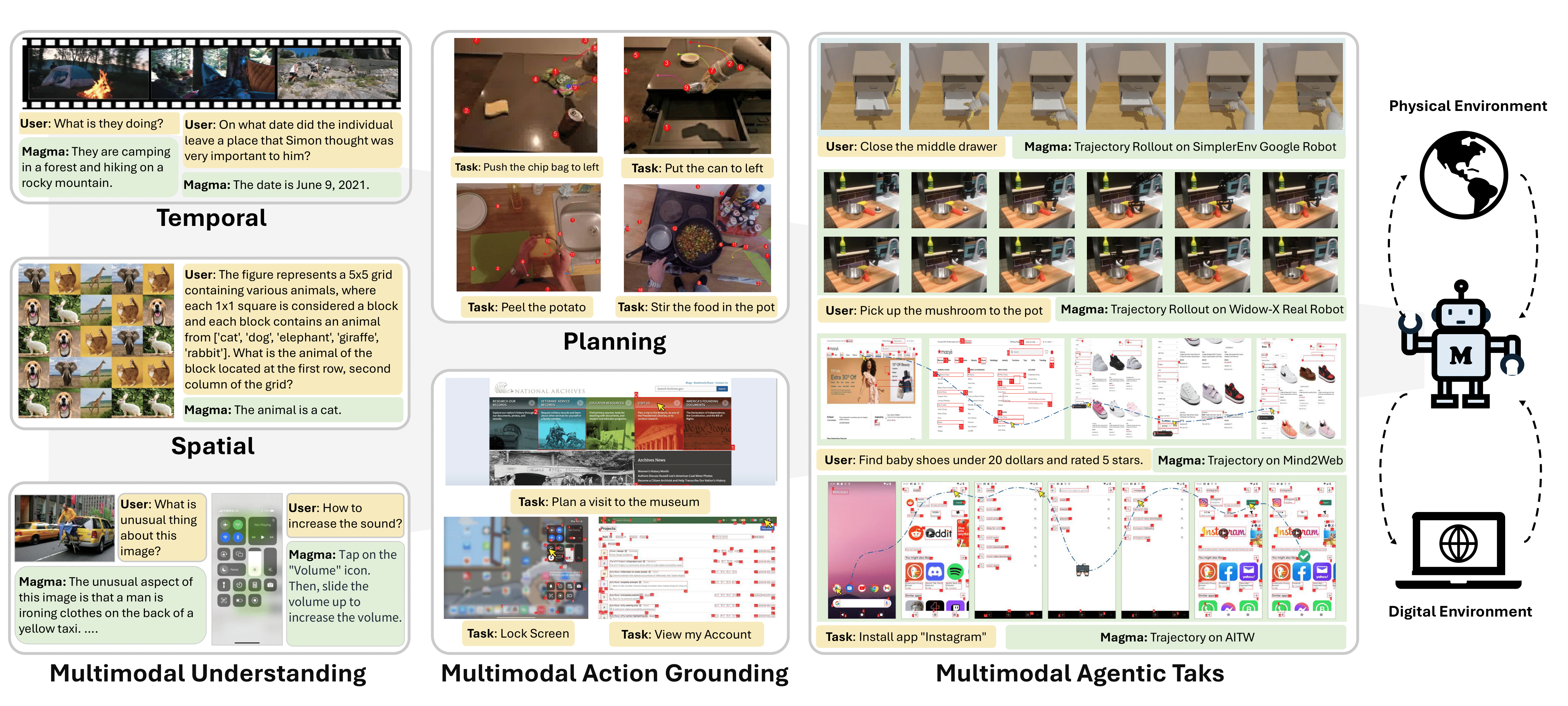

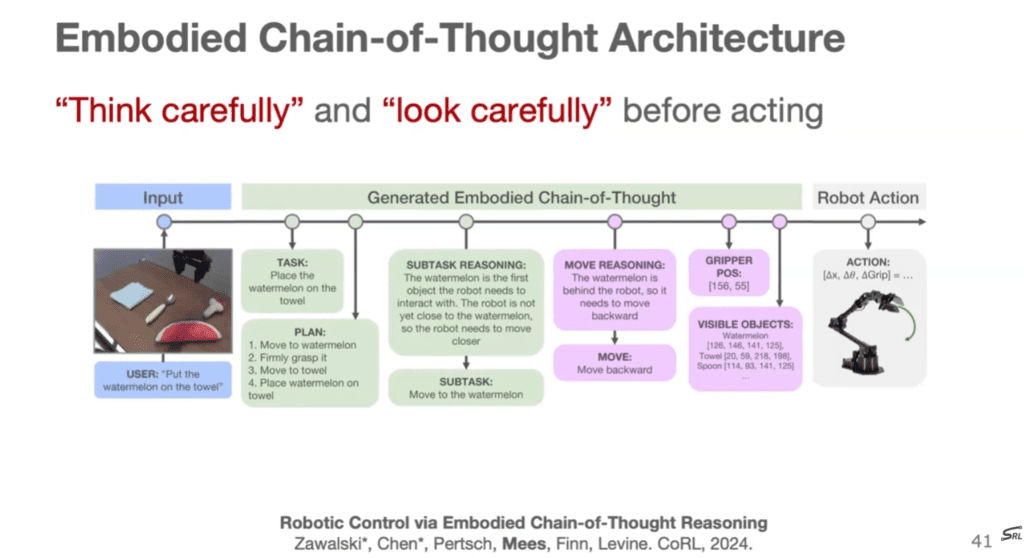

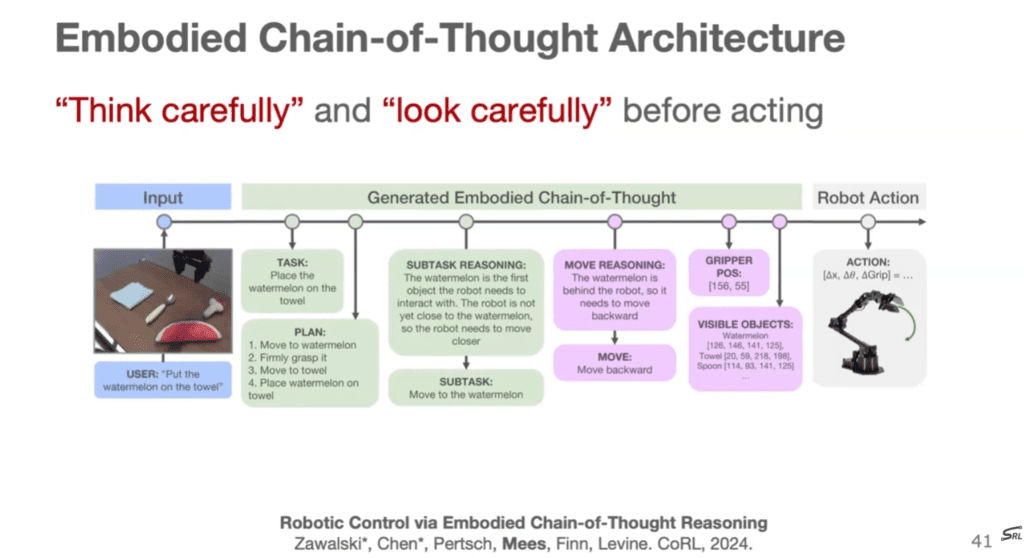

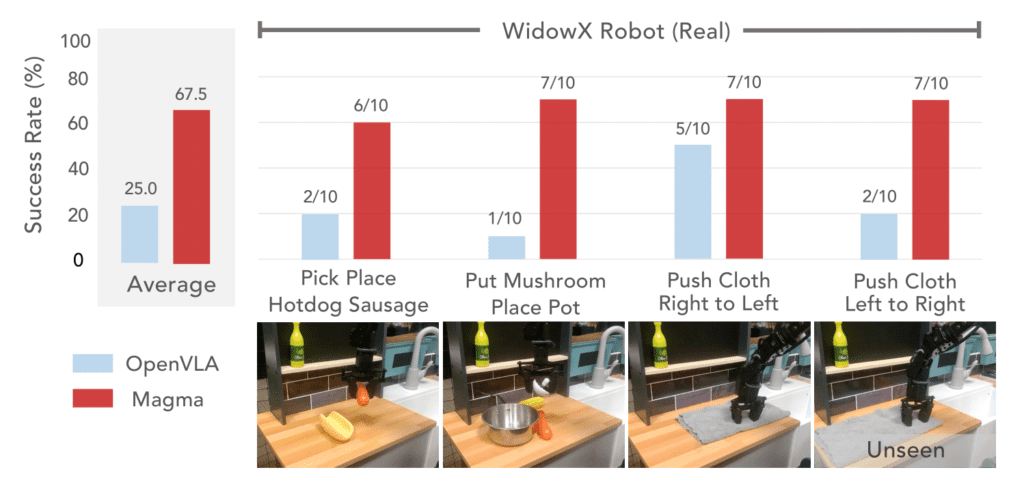

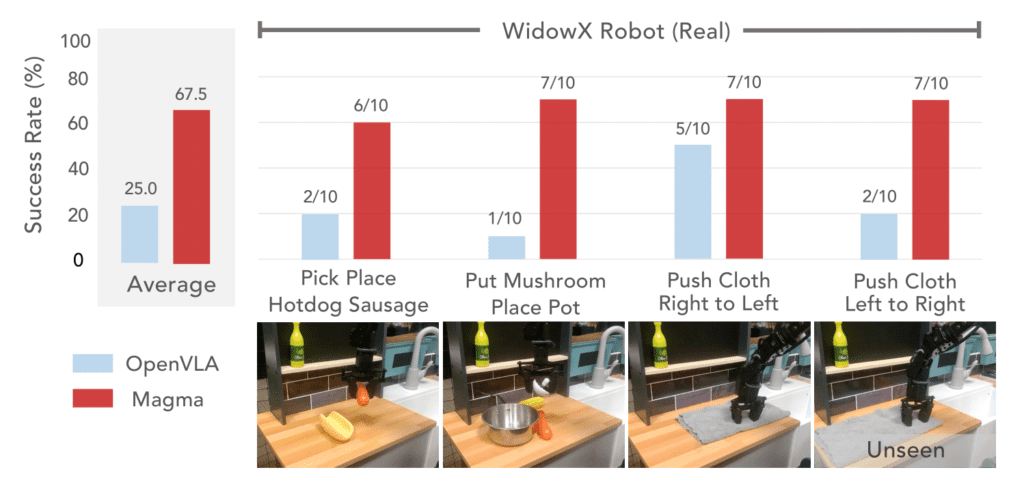

Instead of calculating every movement from scratch, the model predicts useful motions based on past experience. Microsoft has shared research on this in its Magma project, which bridges verbal, spatial, and temporal intelligence to navigate complex tasks and settings across the digital and physical worlds:

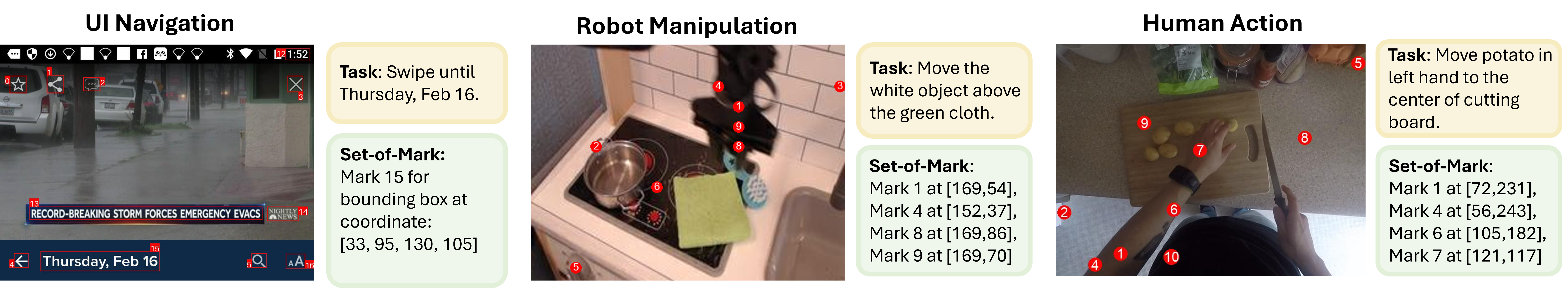

Set-of-Mark (SoM) for Action Grounding. Set-of-Mark prompting enables effective action grounding in images for UI screenshots (left), robot manipulation (middle), and human videos (right) by having the model predict numeric marks for clickable buttons or robot arms in image space.

Trace-of-Mark (ToM) for Action Planning. Trace-of-Mark supervisions for robot manipulation (left) and human action (right). It compels the model to comprehend temporal video dynamics and anticipate future states before acting, while using fewer tokens than next-frame prediction to capture longer temporal horizons and action-related dynamics without ambient distractions.

With foundation models, semantic goals formed by the model (such as "grasp the cup handle") are translated into concrete robot trajectories.
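How a given system turns a predicted mark into motion varies, and this post does not describe Magma's exact pipeline. As one common, simple illustration, the sketch below assumes a depth camera: it back-projects the marked pixel into 3D with the standard pinhole camera model, then interpolates straight-line waypoints toward it. The pixel, depth, camera intrinsics, and function names are hypothetical, and everything stays in the camera frame; a real system would also transform the target into the robot's base frame and check for collisions.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def pixel_to_camera_point(u: float, v: float, depth_m: float,
                          fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Back-project pixel (u, v) with a metric depth reading into the camera frame.

    Pinhole model: fx, fy are focal lengths in pixels, (cx, cy) is the principal point.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def straight_line_waypoints(start: Point3, goal: Point3, n: int) -> List[Point3]:
    """n evenly spaced waypoints from start to goal, both ends included."""
    if n < 2:
        raise ValueError("need at least two waypoints")
    return [
        (start[0] + (goal[0] - start[0]) * i / (n - 1),
         start[1] + (goal[1] - start[1]) * i / (n - 1),
         start[2] + (goal[2] - start[2]) * i / (n - 1))
        for i in range(n)
    ]


# Hypothetical: the model marked the cup handle at pixel (400, 260); the depth camera reads 0.8 m there.
target = pixel_to_camera_point(400, 260, 0.8, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
gripper = (0.0, 0.0, 0.3)  # current gripper position, also in the camera frame (made up)
for p in straight_line_waypoints(gripper, target, n=4):
    print(tuple(round(c, 3) for c in p))
```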

Generalized Robotics Applications

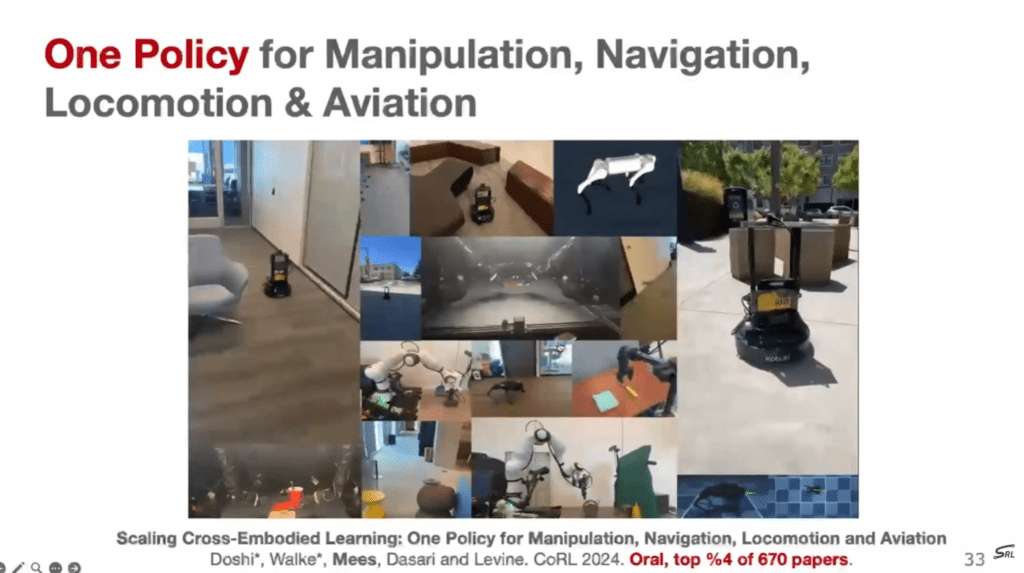

One of the biggest advantages of foundation models is generalization. This means a robot trained on some tasks can perform many different tasks without being reprogrammed each time.

Examples include:

- Manipulation: picking up, rotating, and assembling objects of different shapes and sizes.

- Navigation: moving through rooms, warehouses, or outdoor spaces without detailed maps.

- Human-robot interaction: understanding instructions like “pick up the blue object and place it on the table.”

A single foundation model can often support many robot skills, instead of needing one model per task.

What Is a Policy in Robotics?

In robotics, a policy is simply a rule that tells the robot what action to take in a given situation.

You can think of it like this:

Policy = “Given what I see right now, what should I do next?”

More formally:

- The robot observes the world (camera images, sensor readings, joint positions, etc.)

- The policy takes those observations as input

- The policy outputs an action (move joints, apply force, drive forward, grasp an object)

If a robot sees:

- An object is 20 cm in front of it

- Its arm is slightly to the left

The policy might say:

- “Move the arm forward and slightly right”

This decision-making function is the robot’s policy.
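The example above can be written as a tiny Python function. This is a hand-written proportional rule, assumed purely for illustration (the class names, the 3 cm offset, and the gain are all hypothetical); a foundation-model policy would replace the body with a learned network but keep the same observation-in, action-out shape.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    object_ahead_m: float      # distance from the gripper to the object, straight ahead
    arm_offset_right_m: float  # arm position relative to the object; negative = arm is to the left


@dataclass
class Action:
    move_forward_m: float
    move_right_m: float        # negative = move left


def reach_policy(obs: Observation, gain: float = 0.5) -> Action:
    """A tiny hand-written policy: each step, close a fixed fraction (gain) of the remaining gap."""
    return Action(move_forward_m=gain * obs.object_ahead_m,
                  move_right_m=-gain * obs.arm_offset_right_m)


# The situation from the text: object 20 cm ahead, arm slightly (assumed 3 cm) to the left.
print(reach_policy(Observation(object_ahead_m=0.20, arm_offset_right_m=-0.03)))
```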

Traditional Robotics Policies

Traditionally, policies were:

- Hand-written rules

- Carefully designed equations

- Trained for one specific task

For example:

- One policy for picking up a cup

- A different policy for opening a drawer

- Another policy for navigation

Each policy worked well, but only in a narrow situation.
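A minimal sketch of this traditional style: separate hand-written rules per task, plus a switch that picks the right controller. The task names, observation fields, and thresholds are all hypothetical.

```python
from typing import Any, Callable, Dict

Obs = Dict[str, Any]


# Hypothetical hand-written specialists, one per task. Each reads only the
# observation fields its own task needs and returns a high-level action.
def pick_cup_policy(obs: Obs) -> str:
    return "close gripper" if obs["gripper_to_cup_m"] < 0.02 else "move toward cup"


def open_drawer_policy(obs: Obs) -> str:
    return "pull handle" if obs["handle_grasped"] else "reach for handle"


def navigate_policy(obs: Obs) -> str:
    return "stop" if obs["distance_to_goal_m"] < 0.1 else "drive forward"


SPECIALISTS: Dict[str, Callable[[Obs], str]] = {
    "pick_cup": pick_cup_policy,
    "open_drawer": open_drawer_policy,
    "navigate": navigate_policy,
}


def act(task: str, obs: Obs) -> str:
    """Switch to the controller written for this task."""
    if task not in SPECIALISTS:
        # Any new task means writing and tuning another policy by hand.
        raise KeyError(f"no policy written for task {task!r}")
    return SPECIALISTS[task](obs)


print(act("pick_cup", {"gripper_to_cup_m": 0.10}))
print(act("navigate", {"distance_to_goal_m": 0.05}))
```

Each function works for its own task, but nothing carries over: a request the table does not list simply fails.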

What Does “One Policy” Mean?

When people talk about “one policy”, they mean:

A single policy that can handle many different tasks, environments, and situations.

Instead of switching between many specialized controllers, the robot uses one unified decision-making system.

How Foundation Models Enable One Policy

Foundation models make this possible because they learn general patterns, not just task-specific rules.

Foundation models are trained on:

- Many tasks

- Many environments

- Many robot states and actions

Because of this, the model learns:

- How objects behave

- How robot motions relate to outcomes

- How tasks are similar to each other

This allows one policy to cover many behaviors.

Shared Representation of the World

A foundation model builds a shared internal representation of:

- Vision (what the robot sees)

- Language (what it’s told to do)

- Proprioception (how its body feels)

Because everything is represented together:

- The same policy can pick, place, push, or navigate

- The task changes, but the policy stays the same

Only the input changes.
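A toy sketch of what "represented together" can mean at the input level, under heavy simplifying assumptions: each modality gets a hypothetical stand-in encoder, the outputs are concatenated into one vector, and a single weight matrix maps that vector to an action. Real foundation models use large learned encoders and fuse the modalities inside the network; the vocabulary, encoders, and random weights here are illustrative only.

```python
import random
from typing import List, Sequence

VOCAB = ["pick", "place", "push", "navigate"]  # hypothetical tiny instruction vocabulary


def encode_vision(object_xy: Sequence[float]) -> List[float]:
    # Stand-in for an image encoder: assume perception already gives the object's (x, y) offset.
    return [float(c) for c in object_xy]


def encode_language(instruction: str) -> List[float]:
    # Stand-in for a text encoder: word counts over VOCAB.
    words = instruction.lower().split()
    return [float(words.count(w)) for w in VOCAB]


def encode_proprio(joint_positions: Sequence[float]) -> List[float]:
    # Proprioception is already numeric, so pass it through.
    return [float(q) for q in joint_positions]


def fuse(object_xy: Sequence[float], instruction: str,
         joint_positions: Sequence[float]) -> List[float]:
    """Concatenate every modality into one shared input vector."""
    return encode_vision(object_xy) + encode_language(instruction) + encode_proprio(joint_positions)


def linear_head(features: Sequence[float], weights: Sequence[Sequence[float]]) -> List[float]:
    """One weight matrix shared by all tasks: action[i] = sum_j weights[i][j] * features[j]."""
    for row in weights:
        if len(row) != len(features):
            raise ValueError("each weight row must match the feature length")
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


random.seed(0)
# Made-up weights: 2 action outputs x 8 features (2 vision + 4 language + 2 joints).
# A real model would learn these from data.
W = [[random.uniform(-1, 1) for _ in range(8)] for _ in range(2)]
scene, joints = (0.3, -0.1), (0.0, 0.5)
for instruction in ["pick the cup", "push the cup"]:
    action = linear_head(fuse(scene, instruction, joints), W)
    print(instruction, "->", [round(a, 3) for a in action])
```

Both instructions go through exactly the same weights; only the input vector differs.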

Conditioning on Goals or Instructions

Instead of hard-coding the task, the policy can be conditioned on:

- A goal (e.g., target position)

- A language instruction (“put the block in the box”)

- A task embedding

So the policy becomes:

“Given what I see and what I’m asked to do, what action should I take?”

This allows one policy to perform many tasks.
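A minimal sketch of goal conditioning, assuming a 2D reaching task: one function takes the current position and a goal, so switching tasks means switching the goal, not the code. `goal_from_instruction` is a deliberately crude, hypothetical stand-in for the language understanding a foundation model would provide, and the scene coordinates are made up.

```python
import math
from typing import Dict, Tuple

Vec2 = Tuple[float, float]

# Hypothetical scene: object positions (m) that perception would normally provide.
SCENE: Dict[str, Vec2] = {"cup": (0.30, 0.10), "box": (-0.20, 0.40)}


def goal_from_instruction(instruction: str) -> Vec2:
    """Crude stand-in for a language encoder: target the last scene object the instruction names."""
    named = [w for w in instruction.lower().replace(".", " ").split() if w in SCENE]
    if not named:
        raise ValueError(f"no known object in {instruction!r}")
    return SCENE[named[-1]]


def goal_conditioned_policy(position: Vec2, goal: Vec2, max_step: float = 0.05) -> Vec2:
    """One policy for every reaching task: step toward whatever goal it is conditioned on."""
    dx, dy = goal[0] - position[0], goal[1] - position[1]
    dist = math.hypot(dx, dy)
    if dist <= max_step:
        return (dx, dy)          # close enough: finish the move
    scale = max_step / dist      # otherwise cap the step length
    return (dx * scale, dy * scale)


def rollout(start: Vec2, goal: Vec2, max_steps: int = 100) -> Vec2:
    """Apply the same policy repeatedly until the goal is reached or steps run out."""
    pos = start
    for _ in range(max_steps):
        if math.hypot(goal[0] - pos[0], goal[1] - pos[1]) < 1e-9:
            break
        step = goal_conditioned_policy(pos, goal)
        pos = (pos[0] + step[0], pos[1] + step[1])
    return pos


for instruction in ["pick up the cup", "put the block in the box"]:
    goal = goal_from_instruction(instruction)
    end = rollout((0.0, 0.0), goal)
    print(f"{instruction!r}: goal={goal}, reached={tuple(round(c, 3) for c in end)}")
```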

Generalization to New Situations

Because foundation models learn broad patterns:

- They can handle new objects

- They can adapt to new layouts

- They can recover from small mistakes

This means the same policy can keep working when the environment changes, as long as the new situation is not too far from what the model saw during training.

Intuitive Analogy

Think of a human:

- You don’t have a separate “policy” for opening every door

- You use one general decision-making ability

- You adapt based on what you see and what you want to do

Foundation models aim to give robots a similar kind of general-purpose policy.

Why One Policy Is Powerful

Using one foundation-model-based policy means:

- Less hand-engineering

- Easier scaling to new tasks

- More robust real-world performance

This is why many modern robotics systems aim for:

- One model

- One policy

- Many tasks

Why This Matters

Foundation models make robots:

- More flexible

- Faster to deploy in new environments

- Better at handling real-world complexity

This is especially important for applications like home robots, warehouse automation, healthcare, and exploration.

Conclusion

Foundation models are transforming robotics by giving machines a broader understanding of movement, environments, and tasks. From smoother trajectory control to more general-purpose robot behavior, these models help robots move beyond rigid programming.

As foundation m