In this post, we’ll cover how a robot knows where it is, which is what allows it to move around its environment and reach a specific goal destination. We also include a number of images showing how a robot represents the world and navigates within it. So, how do robots know where they are?

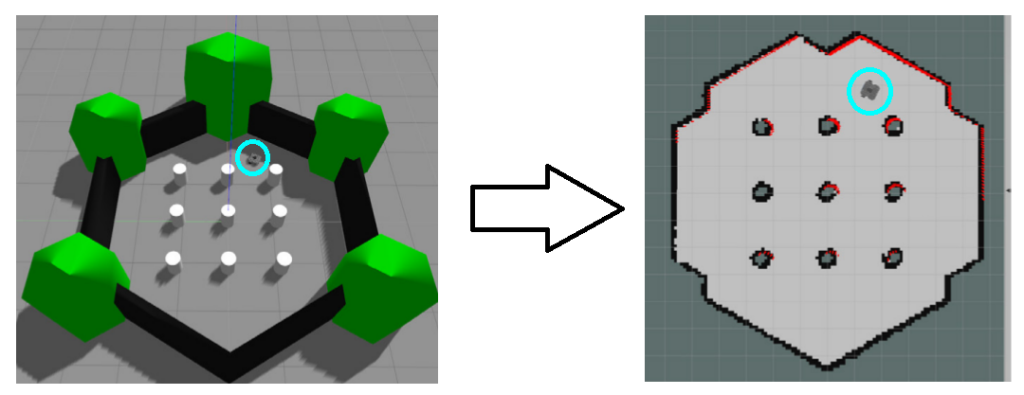

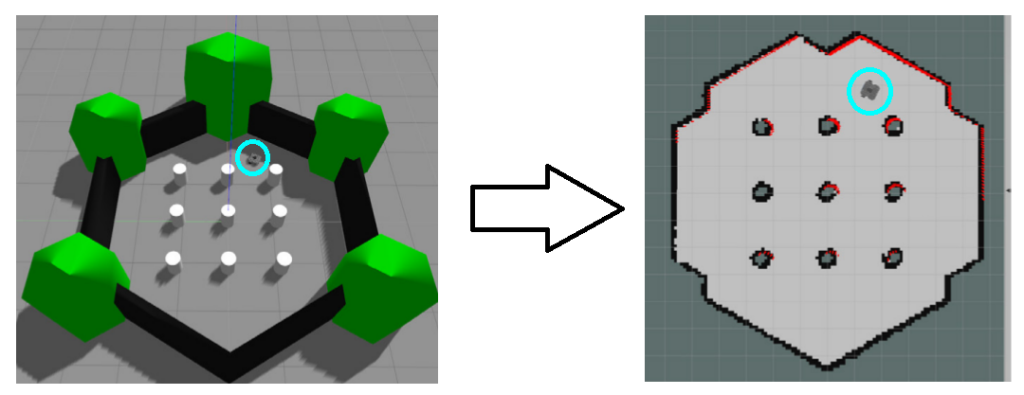

When a robot moves around in the world, it normally moves in an environment it has seen before. As covered in our previous post, robots can see the world around them using sensors such as cameras or LiDARs. A robot can save information about where it can and cannot go in a map. In robotics, mapping is how a robot estimates the positions of features in the environment and saves them so it can easily move around that environment in the future. Below is an example of a robot map, with the environment on the left and the map on the right, and the robot highlighted in blue:

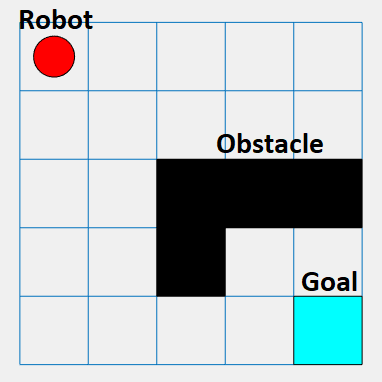

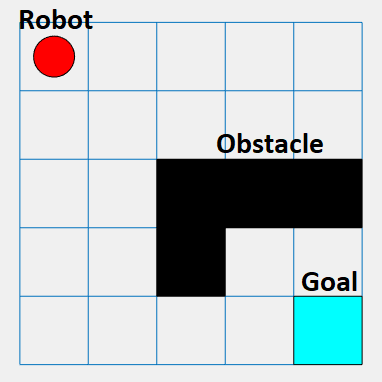

A common map format for robots navigating in 2-D space is the occupancy grid, in which each grid cell indicates whether the robot can move there (free space) or is blocked by an obstacle (such as a wall):
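As a rough illustration in code, an occupancy grid can be stored as a simple 2-D list. The grid layout, the `FREE`/`OCCUPIED` values, and the helper names `is_free` and `free_neighbors` are all assumptions made for this sketch, not part of any particular robotics library:

```python
# Hypothetical 5x5 occupancy grid: 0 = free space, 1 = obstacle (e.g. a wall).
FREE, OCCUPIED = 0, 1

grid = [
    [1, 1, 1, 1, 1],
    [1, 0, 0, 0, 1],
    [1, 0, 1, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 1, 1, 1, 1],
]


def is_free(grid, row, col):
    """Return True if (row, col) is inside the grid and not blocked."""
    in_bounds = 0 <= row < len(grid) and 0 <= col < len(grid[0])
    return in_bounds and grid[row][col] == FREE


def free_neighbors(grid, row, col):
    """List the 4-connected cells the robot could step into from (row, col)."""
    steps = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right
    return [
        (row + dr, col + dc)
        for dr, dc in steps
        if is_free(grid, row + dr, col + dc)
    ]
```

Real maps often store a probability of occupancy per cell rather than a hard 0 or 1, but the idea of looking up a cell to decide whether the robot may move there is the same.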

The process by which a robot figures out where it is on the map is known as localization: determining the robot’s position from observed features whose positions are known. The term for exactly where the robot is on the map and in space is its pose: a representation of position and orientation. A 2-D pose is (x, y, theta), where theta is the angle the robot is facing. Consider a car on a grid: if it starts facing one way and we want it to end up facing another, we need to describe where it is (x, y) and which direction it is facing (theta) within a coordinate frame (the frame of reference relative to which the robot’s position is described):
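To make the (x, y, theta) idea concrete, here is a minimal sketch of a 2-D pose and two simple motions. The `Pose2D` class and the `drive_forward` and `turn` helpers are hypothetical names chosen for this example, and we assume theta is in radians, measured counterclockwise from the x-axis:

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    theta: float  # heading in radians, counterclockwise from the x-axis (assumed)


def drive_forward(pose, distance):
    """Move the robot `distance` units along the direction it is facing."""
    return Pose2D(
        pose.x + distance * math.cos(pose.theta),
        pose.y + distance * math.sin(pose.theta),
        pose.theta,
    )


def turn(pose, angle):
    """Rotate in place by `angle` radians, keeping theta within [-pi, pi]."""
    new_theta = pose.theta + angle
    wrapped = math.atan2(math.sin(new_theta), math.cos(new_theta))
    return Pose2D(pose.x, pose.y, wrapped)


# Start at the origin facing along +x, drive 2 units, turn left 90 degrees,
# then drive 1 more unit.
pose = Pose2D(0.0, 0.0, 0.0)
pose = drive_forward(pose, 2.0)
pose = turn(pose, math.pi / 2)
pose = drive_forward(pose, 1.0)
```

Notice that the same "drive forward" command changes x in one case and y in the other. That is why the heading theta has to be part of the pose, not just the position.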

While a robot can know where it is (its pose) given a map of the environment, it’s also important for a robot to be able to navigate in new, unfamiliar environments. When a robot doesn’t already have a map, it needs to create one. A map can be created by sensing the environment and saving the key landmark features the robot sees, such as walls or doors. The process by which the robot learns its environment and keeps track of where it is while building its map is known as SLAM: simultaneous localization and mapping. In the SLAM visualization below, notice that as the robot moves around the space, it uses its sensors to both avoid walls and detect and save where they are:

Next, let’s consider a robot that is turned on in an environment it has a map for. How would it know where it is? If it is inside a building with many rooms, how would it know exactly which room it is in? When a robot is faced with a probability-based question such as this, where there are multiple possible answers (am I in room 1? room 2? room 3? the hallway?), rather than deciding outright that it is in a given room, it determines the probability (chance) that it is in each one. A common technique for doing this is known as particle filtering.
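Before getting to particles, the underlying idea of keeping a probability for each possible answer can be sketched with a simple Bayes update over the four places mentioned above. The sensor likelihood numbers here are invented purely for illustration, and `bayes_update` is a hypothetical helper name:

```python
def bayes_update(prior, likelihood):
    """Multiply prior by likelihood for each place, then normalize to sum to 1."""
    unnormalized = {place: prior[place] * likelihood[place] for place in prior}
    total = sum(unnormalized.values())
    return {place: value / total for place, value in unnormalized.items()}


places = ["room 1", "room 2", "room 3", "hallway"]

# With no information yet, every place is equally likely.
prior = {place: 1.0 / len(places) for place in places}

# Assumed chance of sensing "a long open space ahead" in each place.
likelihood = {"room 1": 0.1, "room 2": 0.1, "room 3": 0.2, "hallway": 0.6}

posterior = bayes_update(prior, likelihood)
```

After this one observation, the hallway becomes the most probable answer, but the rooms are not ruled out entirely. The robot keeps all of the possibilities and simply shifts how much it believes in each.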

With particle filtering, we distribute a number of particles across the world representation, where each particle is a hypothesis (guess) that we are in a given place. The density of particles represents the underlying probability distribution over where we are, so a high density of particles reflects a high probability that we are in a given place. We update the particles based on measurement data from sensors, and hypotheses that are too unlikely are eliminated. Likely hypotheses are “replicated” in their local neighborhood (area) so that the total number of particles remains the same; we simply move our guesses around based on observations from the environment. This means that if we see we are in a hallway, we can narrow down our guess of where we are to somewhere in the hallway instead of a room. The process is repeated until the distribution stabilizes, meaning our particles are grouped around a single area and there is a high probability (certainty) that the robot is in that spot.
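The loop described above (move the guesses, weight them by how well they match the sensor, then resample) can be sketched in a few lines. This is a deliberately simplified 1-D version under stated assumptions: the robot drives along a 10 m corridor toward a wall and measures its distance to that wall. The corridor length, noise values, and the function name `particle_filter_step` are all assumptions for this example:

```python
import math
import random


def gaussian(error, sigma):
    """Unnormalized Gaussian likelihood of seeing this measurement error."""
    return math.exp(-0.5 * (error / sigma) ** 2)


def particle_filter_step(particles, move, measured_range, wall=10.0,
                         motion_noise=0.1, sensor_noise=0.5, rng=random):
    # 1. Predict: shift every guess by the commanded motion, plus some noise.
    moved = [p + move + rng.gauss(0.0, motion_noise) for p in particles]

    # 2. Update: weight each guess by how well its expected range to the wall
    #    matches what the sensor actually measured.
    weights = [gaussian((wall - p) - measured_range, sensor_noise) for p in moved]
    if sum(weights) == 0.0:  # every guess was implausible; fall back to uniform
        weights = [1.0] * len(moved)

    # 3. Resample: unlikely guesses tend to be dropped and likely ones
    #    duplicated, keeping the total number of particles the same.
    return rng.choices(moved, weights=weights, k=len(moved))


rng = random.Random(0)  # fixed seed so the run is repeatable
true_x = 2.0            # the robot's real position (unknown to the filter)
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # guesses everywhere

for _ in range(5):
    true_x += 1.0  # the robot drives 1 m forward
    measured = (10.0 - true_x) + rng.gauss(0.0, 0.5)  # noisy range reading
    particles = particle_filter_step(particles, 1.0, measured, rng=rng)

estimate = sum(particles) / len(particles)  # average of the surviving guesses
```

In this toy corridor a single range reading already narrows things down a lot. In a real building, many places look alike to the sensor, which is why the particles usually need several moves and measurements before they cluster in one spot.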

In the particle filter visualization below, the blue lines represent the robot sensing the environment, and the red dots (particles) represent where in the environment the robot thinks it might be. Initially, the robot could be anywhere on the map, so the red dots are spread all over it (every position on the map is equally likely). Then, as the robot moves around the environment and sees different features, such as walls at different distances, it can rule out places where it cannot be (if it sees that it is in a small room, it cannot be in the long corridor, and vice versa). This changes the probability that it is in a given spot based on what it has seen, which changes how the red dots are distributed across the map. The more the robot moves, the more features it sees, and the more confident it can be about where it is:

Sensors are great for allowing a robot to see the environment around it, but they are not perfect and may introduce error. An important tool in the robotics toolkit for managing this error and noise is the Kalman filter. The Kalman filter lets us model uncertainty with an equation. It has a prediction step, which estimates the new state and accounts for noise, and an update step, which refines that prediction using the measured sensor reading, weighting each by how much we trust the model versus the measurement (the Kalman gain). This is an advanced topic and can be read up on more here.
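For a feel of the predict and update steps, here is a minimal 1-D sketch in which the robot’s position is a single number with a variance describing how uncertain we are. Real Kalman filters work with vectors and matrices; the function names and all of the numbers below are assumptions for illustration only:

```python
def kalman_predict(mean, variance, motion, motion_variance):
    """Prediction step: apply the motion and grow the uncertainty."""
    return mean + motion, variance + motion_variance


def kalman_update(mean, variance, measurement, measurement_variance):
    """Update step: blend prediction and measurement using the Kalman gain."""
    # Gain near 1 means trust the measurement; near 0 means trust the prediction.
    gain = variance / (variance + measurement_variance)
    new_mean = mean + gain * (measurement - mean)
    new_variance = (1.0 - gain) * variance
    return new_mean, new_variance, gain


# Start believing we are at 0.0 m, with variance 1.0.
mean, variance = 0.0, 1.0

# The robot is commanded to move 1.0 m; the motion adds variance 0.5.
mean, variance = kalman_predict(mean, variance, motion=1.0, motion_variance=0.5)

# A sensor then reports 1.2 m, with measurement variance 0.5.
mean, variance, gain = kalman_update(mean, variance, 1.2, 0.5)
```

Here the prediction is less certain than the sensor, so the gain leans toward the measurement and the new estimate lands closer to the sensor reading than to the prediction. The variance also shrinks after the update, reflecting that combining two imperfect sources leaves us more certain than either one alone.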

Now that we know how a robot works out where it is by sensing the environment, storing the results in a grid-based map, and using techniques such as particle filtering for localization, we can move on to learning how a robot decides what to do (planning).